How to use GitOps & DevSecOps with Terraform

Here is how I use GitOps and DevSecOps to deliver better Terraform templates more efficiently and securely.

Intro

We are using Terraform to provision infrastructure automatically, and a group of developers works on the Terraform templates at the same time.

Challenges We Are Facing

There are three main challenges we are facing:

- Collaboration

- Security

- Reliability

Collaboration

Since multiple developers work on the Terraform templates at the same time, each of them has a slightly different copy of the templates.

There isn’t a single source of truth. It is challenging to coordinate the changes made to Terraform templates.

Security

Every developer needs an AWS user with an administrator role to perform infrastructure testing with the Terraform templates. It means each developer has direct admin access to the AWS cloud, and the credentials are managed by the developer locally.

If one of the developers leaks the credentials, our entire AWS cloud is at risk. The attack surface grows as more developers join the team to work on the Terraform templates. We also need to manage the AWS users associated with the developers properly.

Reliability

The reliability of changes made by developers is not guaranteed.

We don’t have a systematic way to ensure that changes follow best practices, nor a systematic way for other developers to verify the changes made by a developer.

We also have no way to keep track of the changes. If the latest Terraform templates introduce issues, rolling back is a nightmare.

Solution = GitOps + DevSecOps

To overcome the challenges mentioned above, I introduce GitOps and DevSecOps into our Terraform development.

GitOps integrates best practices into IaC (Infrastructure as Code) development through a version control system, and DevSecOps ensures a flexible, secure, and efficient CI/CD pipeline.

1 GitOps

GitOps is a software development mechanism that improves developer productivity.

1.1 Gitlab - Version Control System

In this case, I am using Gitlab as the version control system that stores the Terraform templates. Gitlab is the single source of truth: all teammates push their changes to Gitlab, so Gitlab always has the latest copy of the Terraform templates.

By doing so, we can version the Terraform templates with commits and branches. We can also easily trace back who made what changes at what time with the Git history.

Thanks to Terraform’s declarative syntax, each commit contains Terraform templates that describe the complete desired infrastructure. Therefore, it is easy to roll back and to perform disaster recovery.

1.2 GitOps Workflow - Development Flow

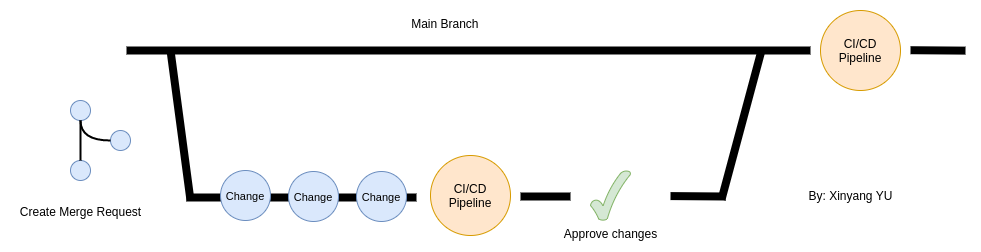

A picture is worth a thousand words: the diagram above visualizes a simple GitOps workflow.

When a developer wants to change the Terraform templates, the developer creates a feature branch from the main branch and opens a merge request for the feature branch.

After the developer pushes the feature branch with the changes to Gitlab, a CI/CD pipeline is triggered to validate the changes and deploy them to the testing environment. Once the changes pass the pipeline, another developer reviews and approves them.

After that, the feature branch is merged back into the main branch, and another CI/CD pipeline is triggered to validate the changes and deploy them to the production environment.

Therefore, GitOps ensures that changes are made transparently and systematically. Tedious validation and deployment are automated and managed by Gitlab, which boosts developer productivity. The quality of the IaC also improves because multiple developers are involved.

In this example, the main branch is associated with the production environment, and the feature branch is associated with a testing environment.

The main components of GitOps are Git, merge requests, and the CI/CD pipeline. We can always add more Git branches and associate them with more deployment environments to meet our needs.
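The branch-to-environment mapping described above could be expressed in `.gitlab-ci.yml` roughly as follows. This is a minimal sketch, assuming GitLab's `rules`, `extends`, and `environment` keywords; the job names, environment names, and placeholder script are hypothetical, and it does not show how each environment maps to its own Terraform Cloud workspace.

```yaml
# Hypothetical sketch: job names, environment names, and the script are placeholders.
.deploy_template:
  stage: deploy
  script:
    # Placeholder: the real Terraform Cloud deploy steps would go here
    - echo "Deploying to $CI_ENVIRONMENT_NAME"

# Pushes to feature branches deploy to the testing environment
deploy_testing:
  extends: .deploy_template
  environment:
    name: testing
  rules:
    - if: $CI_COMMIT_BRANCH && $CI_COMMIT_BRANCH != $CI_DEFAULT_BRANCH

# Pushes to the main (default) branch deploy to the production environment
deploy_production:
  extends: .deploy_template
  environment:
    name: production
  rules:
    - if: $CI_COMMIT_BRANCH == $CI_DEFAULT_BRANCH
```

Adding another environment then means adding another job that extends the template with its own `rules` condition.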

2 DevSecOps

DevSecOps is a pipeline mechanism that improves software delivery with security in mind.

2.1 DevSecOps Pipeline

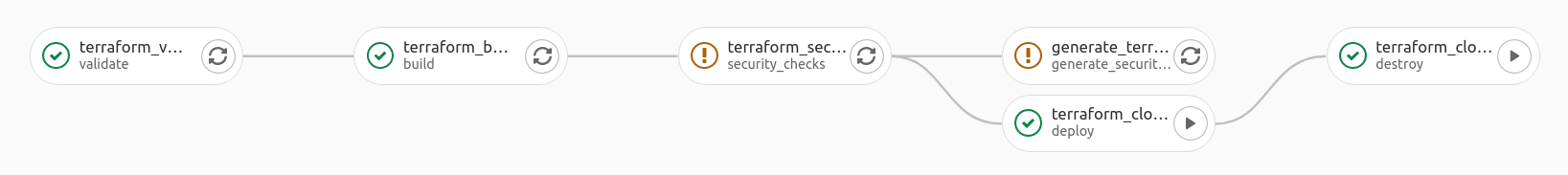

Let’s dive deep into the CI/CD pipeline. It contains six pipeline jobs in total, involving three main components.

The six jobs are:

- Validate - Dev

- Build - Dev

- Security checks - Sec

- Generate security report - Sec

- Deploy - Ops

- Destroy - Ops

The three main components are:

- Gitlab

- Terraform Cloud

- AWS [Amazon Web Services]

2.2 Pipeline Architecture

Below is a diagram showing how the six jobs in the pipeline involve the three main components.

When new changes are pushed to the feature branch or the main branch, a new DevSecOps pipeline is triggered.

The pipeline flow is as follows:

- The validate job ensures the Terraform templates are free of syntax errors. If the job passes, it triggers the build job next.

- The build job uses the Terraform Cloud API key to contact Terraform Cloud to generate an execution plan.

- Terraform Cloud then uses the AWS Access Credentials to check the live state of the infrastructure on AWS.

- The live state of infrastructure is returned to Terraform Cloud, and an execution plan is created.

- Terraform Cloud returns successful build outputs to Gitlab CI/CD.

- The security checks job is triggered if the build job is successful. I am using Checkov to perform security scanning on the Terraform templates.

- After the security checks are finished, the generate security report job is triggered to produce a security report.

- The developer can now manually trigger the deploy job to deploy the changes to the live infrastructure.

- The deploy job uses the Terraform Cloud API key to trigger the Terraform API to implement the changes to the live infrastructure.

- Terraform Cloud implements the changes to the live infrastructure on AWS.

- The outputs of the implementation are returned to Terraform Cloud.

- Terraform Cloud returns the outputs of the implementation to the Gitlab deploy job.

- After the developer finishes testing the live infrastructure, he can trigger the destroy job to remove all infrastructure to avoid unnecessary charges.

- The destroy job uses the Terraform Cloud API key to trigger the Terraform API to remove the live infrastructure.

- Terraform Cloud removes the live infrastructure on AWS.

- The outputs of the removal are returned to Terraform Cloud.

- Terraform Cloud returns the outputs of the removal to the Gitlab destroy job.

2.3 Pipeline Jobs

Let’s dive deep into the details of each pipeline job with code snippets.

The DevSecOps pipeline is constructed with the .gitlab-ci.yml file.

There are six stages for the six pipeline jobs. We define the six stages as shown below.
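A minimal sketch of such a stages list, assuming stage names that mirror the job names (the names themselves are hypothetical):

```yaml
# Stages run in this order; each pipeline job is assigned to one of them
stages:
  - validate
  - build
  - security-checks
  - security-report
  - deploy
  - destroy
```

Because GitLab runs stages sequentially, a job only starts once every job in the previous stage has finished.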

2.3.1 validate job

The first job in the pipeline is the validate job. The job is triggered when changes are pushed to the main branch.

For the job script, we run terraform init -backend=false to initialize the Terraform directory without configuring the backend, so the job does not need Terraform Cloud credentials.

We can then run terraform validate to check if the Terraform templates are free of syntax errors.
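A possible validate job, sketched under the assumption that the runner uses the official `hashicorp/terraform` Docker image and that a stage named `validate` exists; the branch rules are left out:

```yaml
validate:
  stage: validate
  # The image's entrypoint is `terraform`, so it is cleared to let GitLab run a shell
  image: { name: "hashicorp/terraform:latest", entrypoint: [""] }
  script:
    # Skip backend setup, so no Terraform Cloud credentials are needed yet
    - terraform init -backend=false
    # Fail the job if the templates contain syntax or internal consistency errors
    - terraform validate
```

Pinning a specific image version instead of `latest` keeps pipeline runs reproducible.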

2.3.2 build job

If the validate job is successful, the build job is automatically triggered next.

For the job script, we run echo $TERRAFORM_CLOUD_API_KEY >> ~/.terraform.d/credentials.tfrc.json to give the job the credentials it needs to connect to Terraform Cloud.

$TERRAFORM_CLOUD_API_KEY is a JSON object containing the Terraform Cloud token. The format is shown below.

The Terraform Cloud token is retrieved manually by running terraform login, which saves it to ~/.terraform.d/credentials.tfrc.json.

We can then store $TERRAFORM_CLOUD_API_KEY as a CI/CD variable in the Gitlab project settings and refer to it inside the job.

We then run terraform init to initialize the Terraform directory.

Lastly, we run terraform plan to build an execution plan. If the command fails, the changes cannot be implemented, so they cannot be deployed onto AWS either.
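A sketch of how the build job might look, assuming the `hashicorp/terraform` image, a stage named `build`, and Terraform templates that already declare a Terraform Cloud backend (a `remote` backend or a `cloud` block). The comment shows the JSON layout that `terraform login` writes; the token value is a placeholder.

```yaml
build:
  stage: build
  image: { name: "hashicorp/terraform:latest", entrypoint: [""] }
  script:
    # TERRAFORM_CLOUD_API_KEY is assumed to hold the JSON that `terraform login` writes, e.g.
    # {"credentials": {"app.terraform.io": {"token": "<token>"}}}
    - mkdir -p ~/.terraform.d
    - echo "$TERRAFORM_CLOUD_API_KEY" > ~/.terraform.d/credentials.tfrc.json
    - terraform init
    # Terraform Cloud builds the execution plan against the live AWS state
    - terraform plan
```

As an alternative, Terraform 1.2 and later can read the token from an environment variable named `TF_TOKEN_app_terraform_io`. That would let the job skip writing the credentials file.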

2.3.3 security checks job

If the build job is successful, the security checks job is automatically triggered next.

For the job script, we have checkov -d . to scan all Terraform templates for misconfigurations.

The command outputs the scan results to the console, starting with the number of passed, failed, and skipped checks.

The console output of a passed check is shown below. It includes:

- The name of the check

- The specific resource

- The Terraform template

- The related guide on the check

The console output of a failed check is shown below. It includes:

- The name of the check

- The specific resource

- The Terraform template

- The related guide on the check

- The block of Terraform code that causes the failed check

For this job, I also set the keyword allow_failure to true. This means that when the job fails, i.e. when there are failed checks, the pipeline continues instead of terminating.

I set it up this way for two reasons.

First, when we are building an MVP, the top priority is not to optimize everything. We want to push out the basic set of features first. Therefore, it is acceptable to have some failed security and compliance checks.

Second, some use cases may require configuration that leads to failed checks. For example, we may need public access to S3 objects for people to download without any restriction.
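A minimal sketch of the security checks job, assuming the `bridgecrew/checkov` Docker image and a stage named `security-checks` (both assumptions):

```yaml
security_checks:
  stage: security-checks
  # Clear the image's checkov entrypoint so GitLab can run the script in a shell
  image: { name: "bridgecrew/checkov:latest", entrypoint: [""] }
  script:
    # Scan every Terraform template in the repository and print the results to the job log
    - checkov -d .
  # Failed checks mark the job as failed, but the pipeline keeps running
  allow_failure: true
```

Checkov also has a `--soft-fail` flag that returns a zero exit code even when checks fail. Using `allow_failure` instead keeps the failed checks visible in the pipeline as a warning rather than reporting the job as passed.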

2.3.4 generate security report job

After the security checks job is finished, the generate security report job is triggered automatically.

For the job script, we have checkov -d . -o junitxml >> checkov.test.xml to generate a security report in XML format.

To generate the report in JSON format instead, change the -o flag to -o json. Then we export the report as an artifact for downloading.

If there are failed checks in the report, the job fails and the pipeline is terminated. We can prevent that by setting allow_failure to true.
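One way to write the report job, sketched with the same assumed `bridgecrew/checkov` image and a hypothetical `security-report` stage. The `artifacts: when: always` setting matters here. A job that fails because of failed checks would otherwise upload no artifact, even with `allow_failure: true`.

```yaml
security_report:
  stage: security-report
  image: { name: "bridgecrew/checkov:latest", entrypoint: [""] }
  script:
    # Write the results as JUnit XML instead of printing them to the console
    - checkov -d . -o junitxml > checkov.test.xml
  # Failed checks make checkov exit non-zero; keep the pipeline going anyway
  allow_failure: true
  artifacts:
    # Upload the report even when the job fails because of failed checks
    when: always
    paths:
      - checkov.test.xml
    # Optional: lets GitLab display the results in its test report views
    reports:
      junit: checkov.test.xml
```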

2.3.5 deploy job

When the security checks job is finished, developers can manually trigger the deploy job to get Terraform Cloud to deploy changes onto AWS.

I set the job to be manually triggered with when: manual.

The reason is that I want to involve the developers to decide when to deploy the changes after they review the outputs of the security checks job.

If the deploy job were triggered automatically after the security checks job, and the checks had found unacceptable security or compliance issues, those issues would become loopholes in the live infrastructure.

Therefore, I set the deploy job to be triggered manually. Then developers have the responsibility to ensure the security and compliance issues reported by the security check job are acceptable before deploying the changes to the infrastructure.

Involving developers in this way encourages them to produce secure and reliable code, which reduces the potential damage caused by insecure or unreliable code in production.

For the job script, we run echo $TERRAFORM_CLOUD_API_KEY >> ~/.terraform.d/credentials.tfrc.json to give the job the credentials it needs to connect to Terraform Cloud.

We then run terraform init to initialize the Terraform directory.

Lastly, we run terraform apply --auto-approve to get Terraform Cloud to implement the changes onto AWS.
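A sketch of the manually triggered deploy job. It assumes the `hashicorp/terraform` image, a stage named `deploy`, and a CLI-driven Terraform Cloud workspace, because Terraform Cloud does not allow applies started from the CLI on workspaces connected to a VCS repository.

```yaml
deploy:
  stage: deploy
  image: { name: "hashicorp/terraform:latest", entrypoint: [""] }
  script:
    - mkdir -p ~/.terraform.d
    - echo "$TERRAFORM_CLOUD_API_KEY" > ~/.terraform.d/credentials.tfrc.json
    - terraform init
    # Terraform Cloud applies the changes to AWS without an interactive prompt
    - terraform apply --auto-approve
  # The job waits until a developer starts it from the GitLab UI
  when: manual
```

With job-level `when: manual`, GitLab treats the job as allowed to fail by default. The pipeline can therefore finish successfully even if nobody runs the deploy.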

2.3.6 destroy job

When the deploy job is finished, developers can manually trigger the destroy job to get Terraform Cloud to remove the infrastructure created on AWS.

For the job script, we run echo $TERRAFORM_CLOUD_API_KEY >> ~/.terraform.d/credentials.tfrc.json to give the job the credentials it needs to connect to Terraform Cloud.

We then run terraform init to initialize the Terraform directory.

Lastly, we run terraform destroy --auto-approve to get Terraform Cloud to destroy the infrastructure on AWS.
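The destroy job mirrors the deploy job. Below is a sketch under the same assumptions: the `hashicorp/terraform` image, a CLI-driven Terraform Cloud workspace, and a hypothetical stage named `destroy`.

```yaml
destroy:
  stage: destroy
  image: { name: "hashicorp/terraform:latest", entrypoint: [""] }
  script:
    - mkdir -p ~/.terraform.d
    - echo "$TERRAFORM_CLOUD_API_KEY" > ~/.terraform.d/credentials.tfrc.json
    - terraform init
    # Remove every resource tracked in the workspace's state
    - terraform destroy --auto-approve
  # Started by hand once testing of the live infrastructure is done
  when: manual
```

The destroy step is meant for throwaway testing infrastructure. One option is therefore to limit this job to feature branches, using a `rules` entry such as `if: $CI_COMMIT_BRANCH != $CI_DEFAULT_BRANCH` with `when: manual` moved into that rule.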

2.4 Summary of DevSecOps Pipeline

Below is the whole .gitlab-ci.yml file for the Gitlab DevSecOps pipeline.

Below is the Gitlab visualization of the DevSecOps pipeline.

Terraform Cloud allows us to decouple the infrastructure from the development.

Without Terraform Cloud, we need to store the AWS credentials on Gitlab, so the CI/CD jobs have the permission to use the Terraform template to set up infrastructure on AWS.

This is a potential security issue: developers who have access to Gitlab can also access the AWS credentials.

Terraform Cloud acts as an abstraction layer in front of the AWS cloud. It ensures that only infrastructure engineers have direct access to AWS, which minimizes the attack surface.

In a nutshell, we only need to call the Terraform Cloud API inside the deploy CI/CD job. Terraform Cloud will run the Terraform templates and manage the infrastructure state, credentials, and history.

Let’s zoom out. What if we have ten Gitlab repositories managing ten different sets of Terraform templates? Without Terraform Cloud, we need to configure AWS credentials in all ten Gitlab repos, which means ten groups of developers have access to the AWS credentials. With Terraform Cloud, we configure the AWS credentials in only one place. Thus, the attack surface stays the same as we create more GitOps workflows and DevSecOps pipelines.

Outro

I hope this example demonstrates how Terraform templates and the associated infrastructure lifecycle can be built securely, reliably, and systematically, with the involvement of both automation and developers, using GitOps and DevSecOps.

Want to support me?